Developing a well-rounded theory of why a massive star goes supernova, and of how the resulting shockwave propagates through space, is something of a challenge if you’re conducting your simulations in only one dimension (radially, or outward from the core) plus time.

In those one-dimensional models, the shocks frequently stalled, moving outward in mass but not in radius, and so too did hopes of validating certain theories.

While there were advances along the way, such was the state of affairs until the arrival of “big iron,” a reference to the current generation of world-class supercomputers, like those Burrows has been using at the Argonne Leadership Computing Facility (ALCF), a DOE Office of Science User Facility.

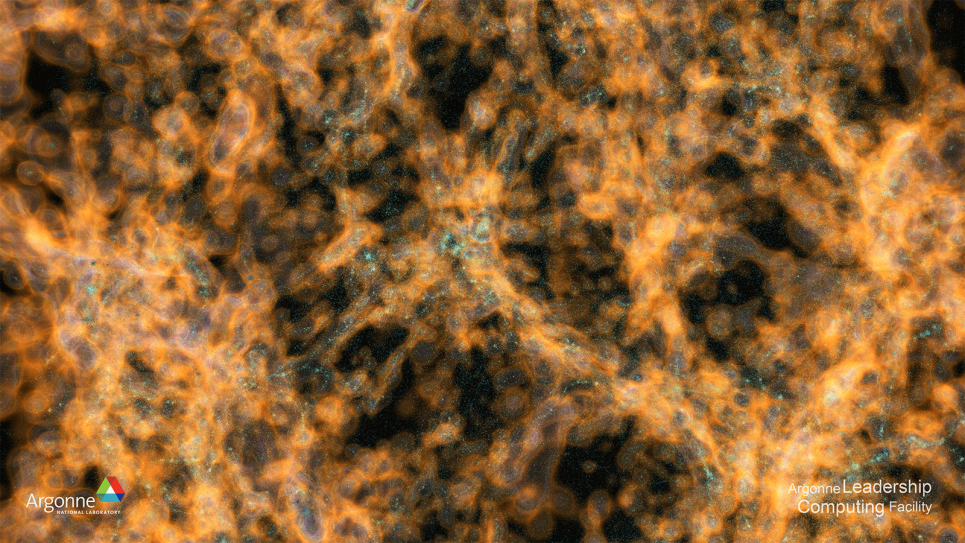

Machines like the ALCF’s Theta provide the computing power researchers need to handle the steeply rising cost of simulations that account for highly complicated dynamics such as neutrino-matter interactions and turbulence, among others.

“And if you have to do turbulence, you go from one dimension, the radial dimension, to three dimensions plus time, of course,” noted Burrows. “And with every dimension, you might go up by a factor of 200 in computational requirements. So, if you’re going from one dimension to three dimensions, you’re going 200 times 200 times more.

“It turns out to be a really complicated problem if you do it right.”
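Burrows’ rule of thumb can be made concrete with a little arithmetic. The sketch below simply takes his rough factor of 200 per added spatial dimension at face value and assumes the factors compound multiplicatively; the function name is hypothetical, and this is not a cost model of any real simulation code.

```python
def relative_cost(dimensions: int, factor_per_dimension: int = 200) -> int:
    """Cost of a simulation relative to a 1D (radial-only) run.

    Assumes, per Burrows' rough estimate, that each spatial dimension
    beyond the first multiplies the computational requirement by
    `factor_per_dimension`.
    """
    if dimensions < 1:
        raise ValueError("need at least one spatial dimension")
    return factor_per_dimension ** (dimensions - 1)


for d in (1, 2, 3):
    print(f"{d}D: {relative_cost(d):,}x the cost of 1D")
```

Under that assumption, going from one dimension to three multiplies the requirement by 200 times 200, or 40,000.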

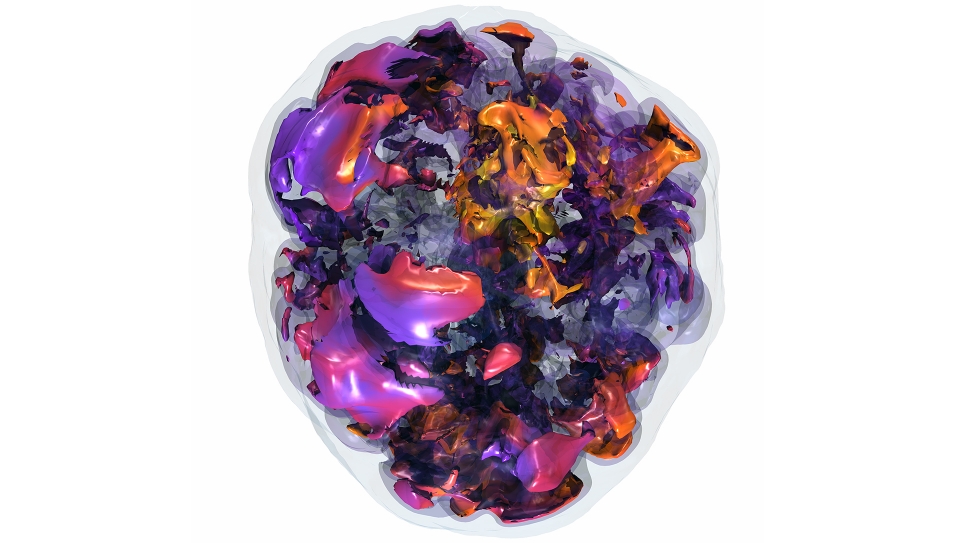

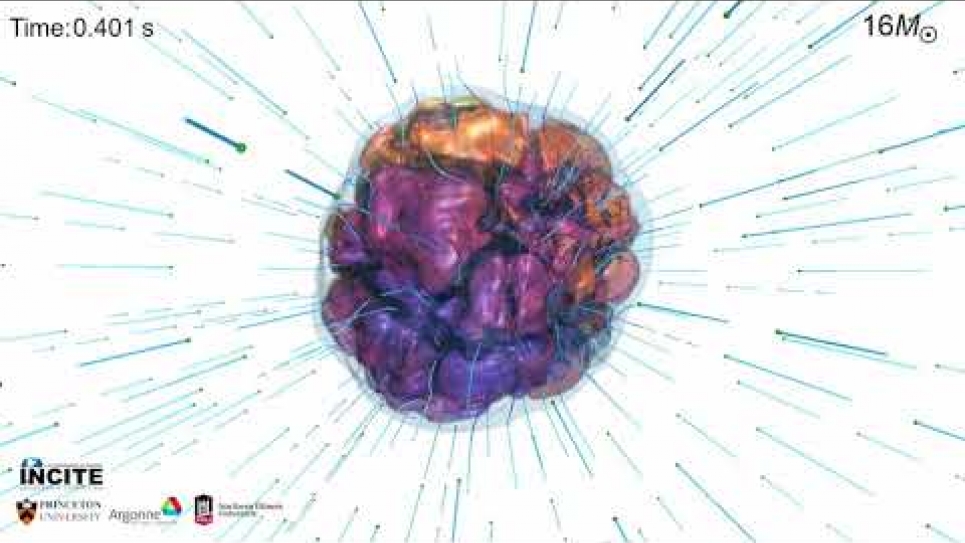

It also turns out that some stars explode more easily in 3D, and 3D simulations offer a clearer window on how that happens. The emerging picture suggests that the turbulent pressure of the exploding stellar matter is a central factor in the explosion.

The key players in the explosion, it appears, are neutrinos, which are produced prodigiously in environments of extreme energy and density, like that of a collapsing star. As they stream outward, the neutrinos decouple from matter and deposit some of their energy behind the shockwave.

This energy deposition, according to Burrows, acts like setting off TNT: it reenergizes the shock and keeps it from stalling. A stalled shock would, in most cases, lead to the formation of a black hole.

“The neutrino energy deposition is crucial,” he added. “When you do the calculations in multiD, you get not only the neutrino energy deposition — that extra energy trying to revitalize the explosion — you also get the turbulent pressure. The two work together to push the explosion over the top most of the time.

“So, what we see more accurately in three dimensions is that most of our calculations actually explode. And this is a new state of affairs.”

The improved calculations are driven in part by Fornax, a state-of-the-art radiation-hydrodynamics code developed at Princeton, running on “big iron” like Theta to capture the complexity and detail of this multiphysics problem.

Producing a realistic yet computationally attainable simulation of the process requires solving the hydrodynamics and the radiative transfer simultaneously; the radiative transfer tracks how neutrinos carry energy through the star at essentially the speed of light.

Until recently, standard practice held that the radiative transfer should be solved with an implicit method. Such a method couples every grid point in the simulation: at each time step, the solution at any single grid point can be found only as part of a consistent solution for the entire grid, computed all at once.
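The difference between the two approaches can be illustrated on a toy problem. The sketch below advances a 1D diffusion equation, a common textbook stand-in for radiative transfer in dense matter, by one time step in two ways. The explicit update computes each grid point from its neighbors’ old values alone; the implicit update must solve a linear system that couples every grid point at once (here with the tridiagonal Thomas algorithm). The function names, fixed boundary values, and test profile are hypothetical; this is a teaching example, not how Fornax discretizes neutrino transport.

```python
def explicit_step(u, r):
    """Forward-Euler update of u_t = D u_xx, with r = D*dt/dx**2.

    Each new value depends only on old values at the point and its two
    neighbors, so every grid point can be updated independently (a
    purely local operation). Stable only for r <= 0.5.
    """
    new = u[:]  # boundary values stay fixed
    for i in range(1, len(u) - 1):
        new[i] = u[i] + r * (u[i - 1] - 2 * u[i] + u[i + 1])
    return new


def implicit_step(u, r):
    """Backward-Euler update: solve (1 + 2r) v_i - r (v_{i-1} + v_{i+1}) = u_i.

    The unknowns at all interior points appear in one linear system, so no
    single point is known until the whole system is solved. Uses the Thomas
    algorithm for tridiagonal systems; assumes at least one interior point.
    """
    n = len(u) - 2            # number of interior unknowns
    a = [-r] * n              # sub-diagonal
    b = [1 + 2 * r] * n       # main diagonal
    c = [-r] * n              # super-diagonal
    d = list(u[1:-1])         # right-hand side (a copy of the old values)
    d[0] += r * u[0]          # fold fixed boundary values into the RHS
    d[-1] += r * u[-1]
    cp = [0.0] * n
    dp = [0.0] * n
    cp[0] = c[0] / b[0]
    dp[0] = d[0] / b[0]
    for i in range(1, n):     # forward sweep (global: touches every point)
        denom = b[i] - a[i] * cp[i - 1]
        cp[i] = c[i] / denom
        dp[i] = (d[i] - a[i] * dp[i - 1]) / denom
    v = [0.0] * n
    v[-1] = dp[-1]
    for i in range(n - 2, -1, -1):  # back substitution
        v[i] = dp[i] - cp[i] * v[i + 1]
    return [u[0]] + v + [u[-1]]


u0 = [0.0, 0.0, 1.0, 0.0, 0.0]
print(explicit_step(u0, 0.25))  # [0.0, 0.25, 0.5, 0.25, 0.0]
print(implicit_step(u0, 0.25))
```

The explicit update parallelizes naturally because each point needs only its neighbors. The implicit solve, generalized to three dimensions and many neutrino energy groups, needs information from across the whole grid, which is the kind of communication that can produce the scaling hit Burrows describes.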

“There weren’t codes that could do that fully implicit solve over the whole grid and still scale well,” said Burrows. “So, you ended up with a big scaling hit when you reached the number of computer cores that were necessary to do a reasonable calculation.”

The team realized it could solve for the radiative transport with the same local, explicit approach it used for the hydrodynamics. In such explicit schemes, the speed of light limits the time step for transport and the speed of sound limits it for hydrodynamics, and light is ordinarily far faster. But at the high densities in the center of the nascent neutron star, the sound speed is high enough that the two limits are similar, which removes the need for the implicit approach.
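The time-step argument can be sketched with the Courant-Friedrichs-Lewy (CFL) condition, under which an explicit scheme’s time step must satisfy dt <= C * dx / v, where v is the fastest signal speed and C is a Courant number below 1. The snippet compares the limits set by light (for transport) and by sound (for hydrodynamics). The zone size, Courant number, and sound speeds are hypothetical round numbers chosen for illustration, not values from the Fornax simulations.

```python
C_LIGHT_KM_S = 299_792.458  # speed of light in km/s


def cfl_time_step(dx_km: float, signal_speed_km_s: float, courant: float = 0.5) -> float:
    """Largest stable explicit time step in seconds: dt = courant * dx / v."""
    return courant * dx_km / signal_speed_km_s


def explicit_transport_penalty(sound_speed_fraction_of_c: float) -> float:
    """Light-limited transport steps needed per sound-limited hydro step.

    Both limits use the same (hypothetical) zone size and Courant number,
    so the ratio reduces to c / c_s. Input is c_s as a fraction of c.
    """
    dx_km = 0.5  # hypothetical zone size
    dt_hydro = cfl_time_step(dx_km, sound_speed_fraction_of_c * C_LIGHT_KM_S)
    dt_light = cfl_time_step(dx_km, C_LIGHT_KM_S)
    return dt_hydro / dt_light


# Hypothetical sound speeds: dilute gas vs. a very dense core.
for frac in (0.001, 0.3):
    steps = explicit_transport_penalty(frac)
    print(f"c_s = {frac} c -> {steps:.1f} light-limited steps per hydro step")
```

When the ratio is only a few, taking the smaller, purely local step can be cheaper than a global implicit solve; when it runs into the thousands, an explicit transport solver would be dragged down by tiny time steps.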

“That all the solves were now local gave us about a factor of five speed-up, and the model was still stable. That was a breakthrough,” exclaimed Burrows.

The MNRAS paper also casts doubt on a long-held view that the compactness of the stellar core, a quantity proportional to a specified interior mass divided by the radius that encloses that mass, determines explodability. As the authors note, “we have found in recent studies in 2D and 3D that this naïve picture is not complete and that explodability is not correlated with compactness in a simple way.”
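For reference, the compactness parameter commonly used in the supernova literature is defined, for an interior mass M, as xi_M = (M / M_sun) / (R(M) / 1000 km), where R(M) is the radius enclosing that mass; it is often evaluated at M = 2.5 solar masses. The function below is a minimal sketch of that definition, and the example radius is a hypothetical value, not one taken from the MNRAS paper.

```python
def compactness(enclosed_mass_msun: float, radius_km: float) -> float:
    """Core compactness xi_M = (M / M_sun) / (R(M) / 1000 km).

    enclosed_mass_msun: interior mass M, in solar masses (often 2.5).
    radius_km: radius R(M) that encloses that mass, in kilometers.
    More mass inside a smaller radius means a more compact core.
    """
    if radius_km <= 0:
        raise ValueError("radius must be positive")
    return enclosed_mass_msun / (radius_km / 1000.0)


# Hypothetical profile point: 2.5 solar masses enclosed within 5,000 km.
print(compactness(2.5, 5000.0))  # 0.5
```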

Early proponents of that view suggested that only low-compactness cores would explode, but Burrows’ team found that both low- and high-compactness cores can explode, while models in the middle range might not explode at all.

“It turns out that when you do these simulations in multiD, even in 2D, you get low-energy explosions,” said Burrows. “As you go up in compactness, it’s possible that you might not get supernovae at all. And when you go up to high-compactness, you get a nice vigorous explosion, but it might start a little bit later.”

While the team’s 3D simulations have invigorated several theories, Burrows acknowledged that much remains unanswered. Resolving those questions will require further simulations, perhaps on the ALCF’s upcoming Aurora, the “big iron” that will be among the nation’s first exascale computers. The answers might also hinge on neutrino interactions that current and future Earth-bound detectors can observe in detail.

And there is always room to refine the codes to get the physics right.

“We’ve been able to do 32 3D simulations in the last year and a half. That’s more than the rest of the world combined,” said Burrows. “However, the physics can always be improved, the numerics can always be improved, and so I will emphasize that these results, as interesting as they are, are provisional.”

Research details appear in the article “The overarching framework of core-collapse supernova explosions as revealed by 3D fornax simulations,” Monthly Notices of the Royal Astronomical Society, Nov. 28, 2019.

This work was supported by the DOE Office of Science and the National Science Foundation. ALCF computing time was awarded through DOE's INCITE program.

The Argonne Leadership Computing Facility provides supercomputing capabilities to the scientific and engineering community to advance fundamental discovery and understanding in a broad range of disciplines. Supported by the U.S. Department of Energy’s (DOE’s) Office of Science, Advanced Scientific Computing Research (ASCR) program, the ALCF is one of two DOE Leadership Computing Facilities in the nation dedicated to open science.

Argonne National Laboratory seeks solutions to pressing national problems in science and technology. The nation's first national laboratory, Argonne conducts leading-edge basic and applied scientific research in virtually every scientific discipline. Argonne researchers work closely with researchers from hundreds of companies, universities, and federal, state and municipal agencies to help them solve their specific problems, advance America's scientific leadership and prepare the nation for a better future. With employees from more than 60 nations, Argonne is managed by UChicago Argonne, LLC for the U.S. Department of Energy's Office of Science.

The U.S. Department of Energy's Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, visit https://energy.gov/science