The annual workshop spans 3 days at Argonne National Laboratory. Image: Argonne National Laboratory

The annual workshop welcomed over 90 attendees to Argonne National Laboratory to work on improving application performance on ALCF systems.

The Argonne Leadership Computing Facility (ALCF), a U.S. Department of Energy (DOE) Office of Science user facility at DOE’s Argonne National Laboratory, regularly hosts hands-on training events to help researchers improve code performance on the lab’s high performance computing (HPC) and artificial intelligence (AI) resources.

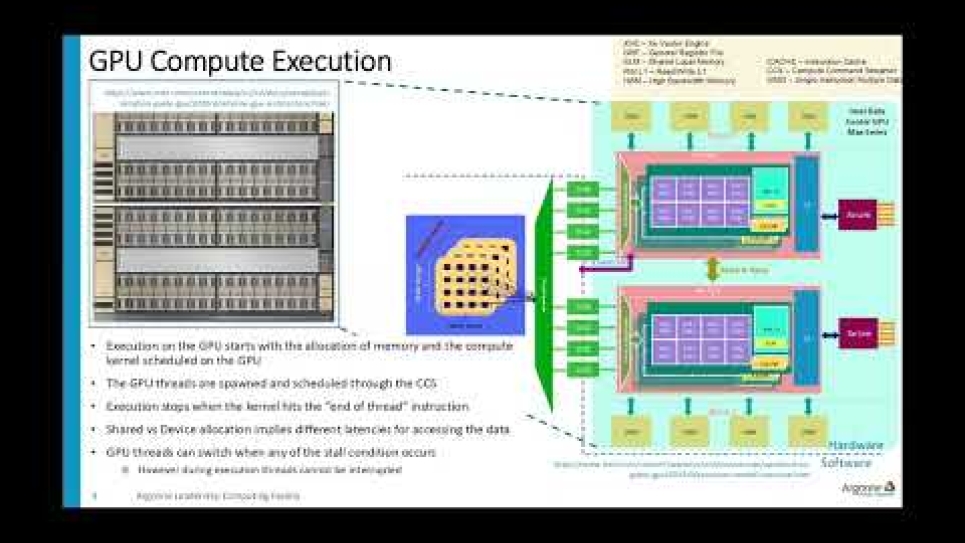

The facility recently welcomed over 90 attendees to Argonne for its annual Hands-on HPC Workshop. The event is designed to help participants port their applications to heterogeneous architectures and explore AI application development on ALCF systems, including Polaris and the AI Testbed.

“Our ALCF Hands-on HPC Workshop provided an avenue for the scientific computing community to learn about the most recent advances in HPC tools and research, along with topics such as large language models that are being developed at Argonne for scientific purposes,” says ALCF’s Yasaman Ghadar, who helped organize this year’s workshop. “The event is also aimed at preparing users for Argonne’s Aurora exascale machine, which will soon be deployed for science.”

Throughout the workshop, participants were able to work directly with ALCF staff and industry experts during dedicated hands-on sessions where they could learn how to use tools and frameworks to improve the productivity of their code. Participants also heard from researchers running on Aurora hardware.

Overview of Aurora from the workshop. Aurora is now open to the research community.

Among the event’s highlights, ALCF’s Thomas Uram covered Nexus, an Argonne effort to advance the development of DOE’s Integrated Research Infrastructure (IRI). The IRI program aims to seamlessly connect experimental facilities with supercomputing facilities to enable experiment-time data analysis.

Uram covered IRI tools and capabilities and discussed how the initiative will simplify access to DOE computing resources by providing robust solutions that are not tailored to any single facility.

In another presentation, the ALCF science team led a panel discussion on the DOE allocation programs that allow researchers to apply for time on the facility’s computing resources: the Innovative and Novel Computational Impact on Theory and Experiment (INCITE) program and the ASCR Leadership Computing Challenge (ALCC).

“The INCITE/ALCC panel discussion gave attendees important context about our allocation programs,” says Raymond Loy, ALCF lead for training, debugging, and math libraries. “These programs are how users are granted large blocks of time on our machines, so helping users to fully understand these programs is key to our success at ALCF.”

To help attendees with data challenges, Greg Nawrocki provided an overview of Globus, a non-profit research data management service developed by the University of Chicago. Nawrocki, director of customer engagement at Globus, described how the platform can automate fast and secure data transfers, enabling data sharing and enhancing collaboration.

Globus' Greg Nawrocki provides an overview of Globus, a platform for research data management, at the ALCF.

In another talk, ALCF’s Sam Foreman covered AuroraGPT, a foundation model being developed to support autonomous scientific exploration across disciplines. He outlined the project’s goals, including exploring pathways towards a “scientific assistant” model, facilitating collaboration with international partners, and making the software multilingual and multimodal.

The workshop concluded with a talk on next steps for attendees interested in applying for future allocations on ALCF supercomputers.

Visit the ALCF events page for upcoming training opportunities.