Accelerating AI inference for high-throughput experiments

An upgrade to the ALCF AI Testbed will help accelerate data-intensive experimental research.

The Argonne Leadership Computing Facility’s (ALCF) AI Testbed—which aims to help evaluate the usability and performance of machine learning-based high-performance computing (HPC) applications on next-generation accelerators—has been upgraded to include a GroqRack, an inference-driven AI system designed to accelerate the time-to-solution for complex science problems.

New research performed at the ALCF—a U.S. Department of Energy (DOE) Office of Science user facility located at Argonne National Laboratory—demonstrates the viability of such inference accelerators to operate in tandem with large-scale scientific instruments such as DOE's light sources and CERN's Large Hadron Collider.

Already used in high-performance computing campaigns to accelerate research in areas such as fusion and COVID-19 drug discovery, Groq systems can also operate as AI accelerators for edge computing applications.

Argonne’s Nexus effort, including edge computing infrastructure, aims to meld DOE experimental user facilities, data sources, and advanced computing resources.

“The massive amounts of data produced by scientific instruments such as light sources, telescopes, detectors, particle accelerators, and sensors create a natural environment for AI technologies to accelerate time to insights,” said Venkatram Vishwanath, ALCF Data Science Team Lead. “The addition of Groq systems means faster AI-inference-for-science, which in turn means more tightly integrated scientific resources.”

The Groq hardware and software ecosystem, built around a unique architecture optimized for inference workloads, is designed to accelerate the time-to-solution for complex AI problems by offering high-speed, low-latency inference. This makes the Groq machines well-suited for intensive, high-throughput applications such as large language model (LLM) inference, which is increasingly common in scientific HPC.

“But Groq’s especially low latency works equally well for something like real-time control of an experimental light source facility,” Vishwanath explained, “in which situation you would be running extremely high-throughput inferences based on every sample collected, as tends to be the case with contemporary leading-edge instruments.

“We’re working to make all of our systems—our current systems and the exascale Aurora system as it comes online—very AI-capable,” he said. “And as we expect our future systems to have even more AI capabilities, we also expect AI accelerators to remain a key component of ALCF HPC architecture.”

To this end, research teams are exploring the performance of computational tools and applications crucial for integrating AI-ready HPC with experimental data sources so as to assess Groq systems’ ability to control scientific instruments.

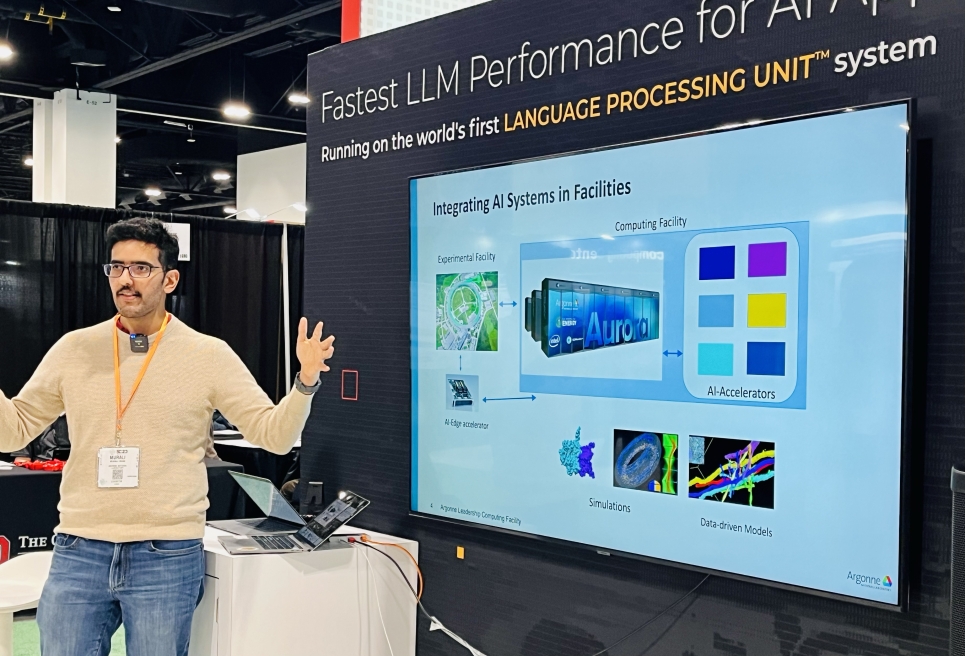

Murali Emani explains how researchers are integrating Groq's AI systems with large experimental instruments.

Tools for real-time instrument control

Using three different API configurations, a study led by Kazutomo Yoshii, an Argonne software development specialist, compared how well Groq runs computational tools important for real-time edge computing with scientific instruments. Such tools are necessary if Groq is to be positioned near experimental data sources.

Real-time application of both computational kernels evaluated by Yoshii’s team depends on strong performance.

Principal component analysis (PCA) is an easily implemented machine learning technique for reducing data size so as to overcome bottlenecks caused by the increasing amounts of data generated by scientific instruments.
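
As a rough illustration of how PCA shrinks a data stream, the sketch below projects synthetic samples onto their leading principal components and then reconstructs them. It is a minimal NumPy sketch, not the Groq implementation evaluated by Yoshii's team; the function names (`pca_reduce`, `pca_reconstruct`), the array sizes, and the synthetic data are all assumptions made for the example.

```python
import numpy as np


def pca_reduce(data, n_components):
    """Project each row of `data` onto the top `n_components` principal components."""
    mean = data.mean(axis=0)
    centered = data - mean
    # SVD of the centered data: rows of `vt` are the principal directions,
    # ordered from largest to smallest explained variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]
    reduced = centered @ components.T
    return reduced, components, mean


def pca_reconstruct(reduced, components, mean):
    """Map reduced coordinates back to the original feature space."""
    return reduced @ components + mean


rng = np.random.default_rng(0)
# Hypothetical stand-in for instrument data: 1,000 samples with 64 features
# that mostly vary along 4 hidden directions, plus a little noise.
latent = rng.normal(size=(1000, 4))
mixing = rng.normal(size=(4, 64))
frames = latent @ mixing + 0.01 * rng.normal(size=(1000, 64))

reduced, components, mean = pca_reduce(frames, n_components=4)
restored = pca_reconstruct(reduced, components, mean)
rel_error = np.linalg.norm(frames - restored) / np.linalg.norm(frames)

print("reduced shape:", reduced.shape)
print("relative reconstruction error:", rel_error)
```

Here each sample is stored with 4 numbers instead of 64, and the small reconstruction error shows how little information is lost when the data really does lie close to a few dominant directions.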

The other kernel studied, Sobel Filter, uses AI to assist data reconstruction by assigning the correct charges to particles passing through high energy physics filters.
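
In its standard image-processing form, a Sobel filter is a pair of 3x3 kernels that estimate horizontal and vertical intensity gradients, which are then combined into an edge-strength map. The following is a minimal NumPy sketch of that standard form only; it is not the Groq implementation studied by Yoshii's team, and the helper names (`filter3x3`, `sobel_magnitude`) and the test image are assumptions made for the example.

```python
import numpy as np

# Standard Sobel kernels for horizontal (x) and vertical (y) gradients.
SOBEL_X = np.array([[-1.0, 0.0, 1.0],
                    [-2.0, 0.0, 2.0],
                    [-1.0, 0.0, 1.0]])
SOBEL_Y = SOBEL_X.T


def filter3x3(image, kernel):
    """Slide a 3x3 kernel over `image` without padding (output loses a 1-pixel border)."""
    height, width = image.shape
    out = np.zeros((height - 2, width - 2))
    for i in range(3):
        for j in range(3):
            # Accumulate the contribution of kernel entry (i, j) for every window at once.
            out += kernel[i, j] * image[i:i + height - 2, j:j + width - 2]
    return out


def sobel_magnitude(image):
    """Return the gradient magnitude, which is large where intensity changes sharply."""
    gx = filter3x3(image, SOBEL_X)
    gy = filter3x3(image, SOBEL_Y)
    return np.hypot(gx, gy)


# Hypothetical 8x8 test image: dark left half, bright right half (one vertical edge).
image = np.zeros((8, 8))
image[:, 4:] = 1.0
edges = sobel_magnitude(image)
print(edges)
```

The filter responds only in the output columns whose 3x3 windows straddle the dark-to-bright boundary. Because every output pixel depends on a small, fixed neighborhood, the operation maps naturally onto streaming hardware.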

Both PCA and Sobel Filter exhibited strong performance across the three different Groq API configurations, but Yoshii’s team believes the performance of their target workload can be improved further still.

“We’re interested in improving our performance results by optimizing the implementation with advanced Groq API techniques and by testing the new C-based Groq runtime, which is expected to reduce runtime overhead,” Yoshii said.

Performance benchmarks for diffraction imaging

Zhengchun Liu, a computer scientist affiliated with Argonne National Laboratory, led a team that evaluated Groq's inference performance when running BraggNN, a deep learning-based solution for fast analysis of Bragg diffraction data (used to measure the wavelengths of different types of crystals) for high-energy x-ray diffraction microscopy (HEDM). HEDM is a common practice at light source facilities.

“We picked BraggNN because HEDM experiments at light sources generate hundreds of diffraction frames—thousands as such facilities expand and upgrade—and each frame can contain thousands of diffraction spots,” Liu said. “HEDM is one of the primary techniques for high-resolution characterization of advanced materials; there is a practical need to process this massive quantity of data in real time, both to minimize storage needs and to enable better experiment steering.”

HEDM’s accuracy depends on precise knowledge of the positions of diffraction peaks, and obtaining that knowledge is computationally expensive and time-consuming. Detecting diffraction peaks only becomes more computationally demanding as the science and related technologies evolve, posing the biggest hurdle to the on-the-spot processing necessary for real-time feedback.

“BraggNN, as a deep learning model, works to localize diffraction peaks far more rapidly than would otherwise be possible,” Liu said, “so our evaluation, using 13,792 samples from a recent HEDM experiment, included both model accuracy and throughput under different batch sizes.”
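
The throughput half of such an evaluation can be sketched as a loop that times inference over the full sample set at several batch sizes. The code below is a minimal sketch under stated assumptions, not the team's benchmark: `run_inference` is a hypothetical single-layer stand-in for BraggNN running on the CPU, the 11x11 patch size and the batch sizes are assumptions, and the inputs are random numbers rather than HEDM data. Only the sample count of 13,792 comes from the text above.

```python
import time

import numpy as np


def run_inference(batch, weights):
    """Hypothetical stand-in model: one dense layer mapping each patch to a (row, col) estimate."""
    return batch.reshape(len(batch), -1) @ weights


def measure_throughput(samples, weights, batch_size):
    """Return samples processed per second when inferring in chunks of `batch_size`."""
    start = time.perf_counter()
    processed = 0
    for begin in range(0, len(samples), batch_size):
        batch = samples[begin:begin + batch_size]
        run_inference(batch, weights)
        processed += len(batch)
    elapsed = time.perf_counter() - start
    return processed / elapsed


rng = np.random.default_rng(0)
# 13,792 samples as in the evaluation; the 11x11 patch size is an assumption.
samples = rng.random((13792, 11, 11), dtype=np.float32)
weights = rng.random((11 * 11, 2), dtype=np.float32)

# Assumed batch sizes, chosen only to show the trend.
results = {bs: measure_throughput(samples, weights, bs) for bs in (1, 16, 256, 1024)}
for bs, rate in results.items():
    print(f"batch size {bs:>5}: {rate:,.0f} samples/s")
```

Larger batches usually amortize per-call overhead and raise throughput, while a batch size of 1 is closer to the per-sample latency that matters for real-time feedback. The absolute numbers depend entirely on the hardware and model.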

With initial BraggNN benchmarks established, Liu’s team plans to work with the Groq team to further optimize the inference performance.

“Moreover,” Liu added, “we also hope to integrate one Groq chip directly with a detector at a scientific facility.”

Opportunity to get access and learn how to use ALCF's GroqRack

An upcoming virtual Groq AI Workshop on December 6 and 7 will introduce users to the Groq systems deployed within the ALCF AI Testbed.