AI at the Edge of Particle Physics

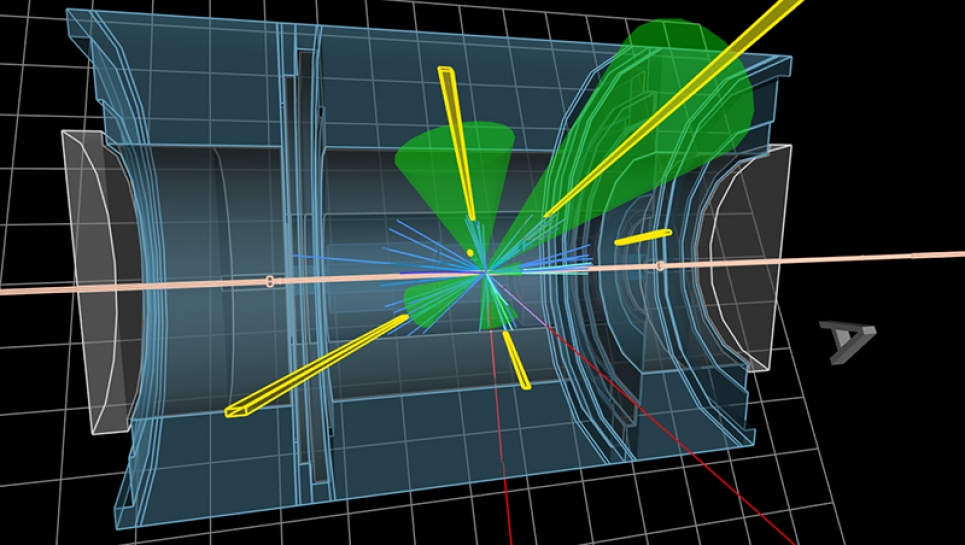

Abstract: Experimental particle physics is entering an unprecedented era. We are exploring the edge of the standard model, our current understanding of the universe’s fundamental building blocks. At the CERN Large Hadron Collider (LHC) near Geneva, Switzerland, we collide protons at nearly the speed of light and analyze the debris from the collisions to learn about elementary particles such as the Higgs boson. We are also at the technology frontier, where new detectors, new computing architectures, and new artificial intelligence (AI) algorithms are needed to cope with the avalanche of data from the intense particle beams. In this seminar, I will explore how we are developing AI methods to confront two major challenges at the LHC: (1) efficiently generating billions of realistic simulated collisions to reduce the enormous computational burden, and (2) quickly filtering millions of collisions per second on field-programmable gate arrays (FPGAs) in pursuit of new physics.

Bio: Javier Duarte is an Assistant Professor of Physics at the University of California San Diego and a member of the CMS experiment at the CERN Large Hadron Collider (LHC). He received his Ph.D. in Physics from Caltech and his B.S. in Physics and Mathematics from MIT.